Social Media Alone Isn’t Making Us More Intolerant: Evidence from Myanmar

Polarization is rising, but not in the way you might think. For example, a study by the Carnegie Endowment for International Peace found that the United States is experiencing not so much ideological polarization as affective, or identity-based, polarization. In other words, we dislike “the other side,” even if we don’t disagree with them all that much.

This shift has come alongside a seeming increase in online toxicity, leading some to connect the two phenomena. Many blame social media for curating in-group echo chambers that foster out-group enmity and for seeding animosity toward vulnerable communities. But is this belief justified?

Consider Myanmar, where the abrupt arrival of Facebook during the country’s political transition has been blamed for enabling violence by the military against minority Muslim communities, including the Rohingya.

This suggestion has become conventional wisdom in some circles. But we looked at evidence from Myanmar, and what we found was surprising: despite hate speech spreading across the site, Facebook users were measurably less intolerant than their offline counterparts.

These findings suggest that we need to treat affective polarization, and intolerant attitudes more generally, as multicausal phenomena, requiring a broad-based approach going beyond social media.

Myanmar Enters the Internet Age

After decades of military rule, Myanmar began a managed transition away from authoritarianism in 2011. With this came political and societal reforms—including broadened access to the Internet.

In 2010, 0.25 percent of Myanmar’s population used the Internet. By 2016, that figure had grown more than a hundredfold, to over a quarter of the population. Facebook became the primary Internet gateway, used by roughly 95 percent of Internet users.

But Myanmar’s political transition—which ended in 2021 with a military coup—had a dark side. Violence between the Buddhist majority and Rohingya Muslims erupted in the western state of Rakhine in 2012, followed by increasingly vocal anti-Muslim sentiment across the country. In 2016–17, the military cracked down on the Rohingya, killing thousands and displacing nearly one million in what the United Nations (UN) called “ethnic cleansing.”

Online hate speech accompanied the brutality, and outside observers blamed Facebook for spreading intolerant rhetoric that, they argued, enabled the military. A widely viewed 20-minute monologue by late-night talk show host John Oliver faulted the site and its lack of content moderation for inflaming inter-group hatred. The claim was not that Facebook incited violence directly, but that it cultivated an intolerant online population more likely to approve of military atrocities targeting out-groups like the Rohingya.

Facebook seemed a volatile addition to a rapidly transforming political environment, but the specifics of its contribution remained uncertain. Even UN investigators, who claimed that the platform played a “determining role” in the violence, acknowledged that they didn’t fully understand how.

A Look at the Data

Many see social media as a uniquely toxic digital space that manipulates people into becoming more intolerant. Some suggest that without Facebook, Myanmar would have been a more tolerant place, which might have put a brake on the military’s genocidal campaign. But our evidence undermines the suggestion that the platform was the primary driver of intolerant attitudes in Myanmar, complicating the causal story at the heart of this case.

To examine Facebook’s impact, we conducted a nationwide survey with Innovations for Poverty Action in 2018. We polled a representative sample of 4,280 citizens from across Myanmar’s 14 states and regions to compare levels of tolerance between those who use Facebook and those who do not.

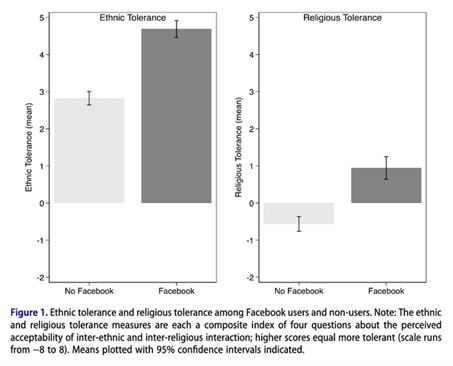

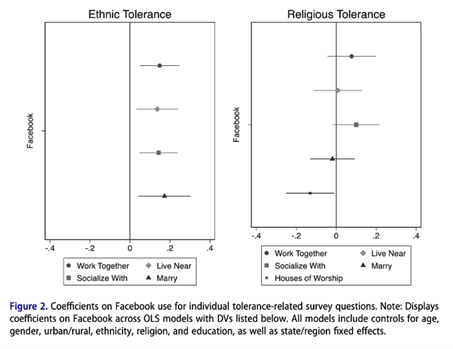

We asked whether it is acceptable to work with, live near, or marry someone from a different ethnicity or religion. These questions measure “social distance,” a core sociological component of affective polarization, and allowed us to quantify the public’s level of tolerance.

We found that while intolerance is high in Myanmar, it is highest among those least online. Moreover, more frequent use of Facebook did not correlate with higher levels of intolerance among Myanmar’s online community. This suggests that Facebook’s ability to shape inter-group attitudes is limited. It is unlikely that the arrival of the Internet instigated intolerant attitudes that would not have surfaced anyway.

These findings may have a simple explanation. Facebook users fit a demographic profile correlated with more tolerant attitudes toward minorities. Compared to Myanmar’s general population, Facebook users are younger and more urban. They are also more educated, which might make them more resistant to hateful online misinformation.

Ultimately, Myanmar’s military was the chief perpetrator of violence against the Rohingya, rather than ordinary people incited by social media. It isn’t clear that the platform made it easier for the military to target the Rohingya. In fact, anti-Rohingya campaigns similar to those in 2016–17 were undertaken by the military in 1978 and 1991–92, long predating social media. Facebook also wasn’t alone in spreading hate speech—similar messages spread through a variety of offline media and organizations, such as religious groups.

Global Lessons

Our study adds to a growing body of research that finds limitations to Facebook’s capacity to drive polarization. Social media is not unique in its potential to promote misinformation and intolerance—and it may not even be the leading driver of such animosity.

This doesn’t absolve Facebook of its lapses in moderation. Nor do we claim that Facebook’s failure to police hateful content caused no harm, in Myanmar or elsewhere.

But the experience of Myanmar tells us that political polarization, out-group intolerance, and violence are society-wide issues not easily confined to social media platforms. And, as other research has noted, whatever malevolent influence social media has likely depends more on how bad actors wield such platforms than on the platforms’ effects on users’ attitudes or beliefs.

If we’re going to respond effectively to these problems, we must understand more accurately how they manifest. That’s more challenging than relying on convenient assumptions that attribute outsized power to a single social media platform. Addressing real-world harms from affective polarization and out-group animosity requires more evidence—and action both online and offline.

Oren Samet is a Ph.D. candidate in political science at UC Berkeley. Leonardo Arriola is professor of political science at UC Berkeley. Aila Matanock is an associate professor at UC Berkeley.

Thumbnail credit: Michael Coghlan (Flickr)

Global Policy At A Glance

Global Policy At A Glance is IGCC’s blog, which brings research from our network of scholars to engaged audiences outside of academia.
